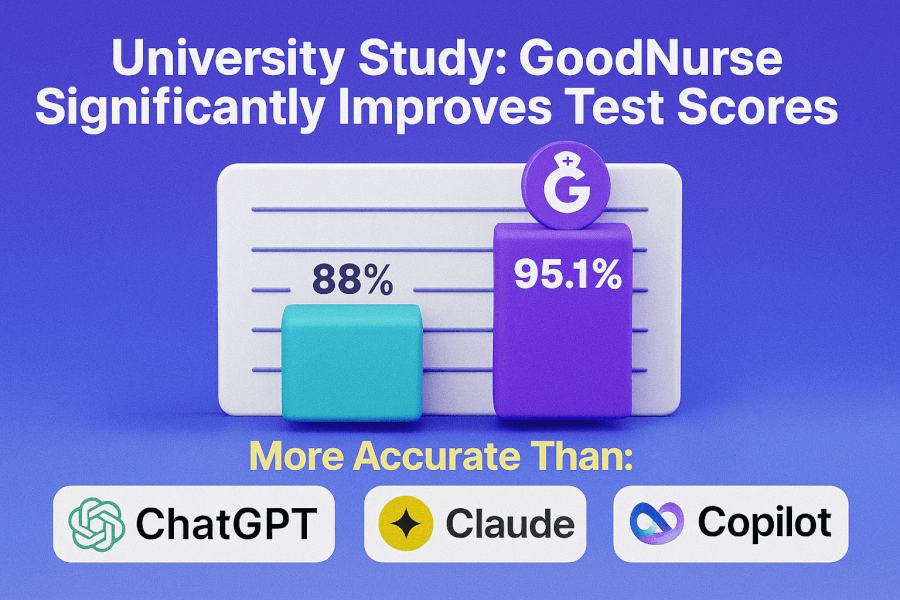

New research from the University of Rochester School of Nursing finds that students using GoodNurse, a specialized AI tutor, achieved significantly higher course grades. A separate study found GoodNurse was more accurate and more cost-effective than leading general-purpose AI models.

We are thrilled to share peer-reviewed research from the University of Rochester School of Nursing slated for presentation at the Eastern Nursing Research Society meeting in March 2026. Together, these studies show that GoodNurse is both effective and economical for nursing education, especially for ECG interpretation. For NCLEX integrity and campus assessment policies, see the National Council of State Boards of Nursing and official NCLEX exam-day rules.

📈 Win 1: Students Using GoodNurse Achieve Higher Grades

Standout result: GoodNurse users averaged 95.1% vs 88.8% for non-users in a 4-credit ECG course (p = .0048).

Study: Generative AI Improves Nursing Student Performance in an ECG Course (University of Rochester School of Nursing).

Design & sample: Spring 2025 cohort; n = 23 (86% female; 30% with >6 years of experience; 91% bedside telemetry). Ten students used GoodNurse; thirteen did not.

Engagement & satisfaction: Mean 69.5 ± 97.9 prompts per student; five “super users” submitted >80 prompts; 52% reported satisfied/very satisfied.

Outcome: Mean course grade 95.1 ± 2.53 (users) vs 88.8 ± 5.85 (non-users), p = .0048.

Cost-effectiveness: Semester ICER ≈ $5.80 per percentage point of grade improvement.
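For readers new to the term, an ICER (incremental cost-effectiveness ratio) is the extra cost divided by the extra effect. The sketch below is illustrative only: the summary above reports the ratio and the two mean grades but not the per-student cost that went into it, so the incremental cost is back-calculated here under the assumption that the effect is the raw difference in mean grades. The function and variable names are hypothetical.

```python
def icer(delta_cost: float, delta_effect: float) -> float:
    """Incremental cost-effectiveness ratio: extra cost per extra unit of effect."""
    if delta_effect == 0:
        raise ValueError("delta_effect must be non-zero")
    return delta_cost / delta_effect


# Values reported in the study summary.
mean_grade_users = 95.1
mean_grade_nonusers = 88.8
reported_icer = 5.80  # dollars per percentage point of grade improvement

# Effect: raw difference in mean course grade (percentage points).
grade_gain = mean_grade_users - mean_grade_nonusers

# ASSUMPTION: this cost is back-calculated from the reported ratio.
# It is not a figure stated in the source.
implied_incremental_cost = reported_icer * grade_gain

print(f"Grade gain: {grade_gain:.1f} percentage points")
print(f"Implied incremental cost per student: ${implied_incremental_cost:.2f}")
print(f"ICER check: ${icer(implied_incremental_cost, grade_gain):.2f} per point")
```

A program with its own per-student cost can pass that figure to `icer` directly, in place of the back-calculated value.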

Mini table — Student outcomes & usage

| Metric | GoodNurse users | Non-users | Notes |
|---|---|---|---|
| Mean course grade | 95.1% | 88.8% | 4-credit ECG; p = .0048 |
| Prompts per student (mean ± SD) | 69.5 ± 97.9 | — | 5 “super users” >80 prompts |
| Satisfaction (satisfied/very satisfied) | 52% | — | End-of-course survey |
| ICER (per 1% grade gain) | $5.80 | — | Semester access cost basis |

🏆 Win 2: GoodNurse Outperforms General-Purpose AI on ECG Tasks

Study: Comparative effectiveness of four models on an 88-question ECG exam (University of Rochester School of Nursing, URSON).

Models: GoodNurse (specialized), OpenAI ChatGPT, Microsoft Copilot, Anthropic Claude (Sonnet 4).

Item formats: MCQ (14), fill-in-the-blank (3), true/false (20), matching (5), select-all-that-apply (18), free-text (5), ECG image interpretation (23).

Mini table — Model accuracy & cost context

| Model | Overall accuracy | Selected strengths | Subscription (semester) | Cost context* |
|---|---|---|---|---|
| GoodNurse | 85.3% | Best on ECG waveform & matching | $49.95 | $8.06 |
| ChatGPT | 83.1% | Strong general text | $1,000 | $250.00 |
| Copilot | 80.9% | Productivity features | $150 | $83.33 |
| Claude (Sonnet 4) | 79.1% | Some mixed-item wins | $0 | Baseline |

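* “Cost context” follows the abstract’s cost-effectiveness framing relative to a baseline: each value is the semester subscription divided by the accuracy gain, in percentage points, over the free Claude baseline (for example, $49.95 / (85.3 − 79.1) ≈ $8.06 per percentage point). Lower is better.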
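The cost column in the table above can be reproduced with a few lines of arithmetic. The sketch below assumes that "cost context" means semester subscription cost divided by accuracy gain over the zero-cost Claude baseline. That reading is inferred from the table values rather than stated by the study, and the function and variable names are hypothetical.

```python
# (overall accuracy in %, semester subscription in USD), taken from the table.
models = {
    "GoodNurse": (85.3, 49.95),
    "ChatGPT": (83.1, 1000.00),
    "Copilot": (80.9, 150.00),
    "Claude (Sonnet 4)": (79.1, 0.00),
}
BASELINE = "Claude (Sonnet 4)"


def cost_per_accuracy_point(models: dict, baseline: str) -> dict:
    """Extra dollars per extra percentage point of accuracy vs. the baseline.

    ASSUMPTION: this is the inferred definition of the "cost context" column.
    """
    base_acc, base_cost = models[baseline]
    result = {}
    for name, (acc, cost) in models.items():
        if name == baseline:
            continue  # the baseline has no ratio against itself
        result[name] = (cost - base_cost) / (acc - base_acc)
    return result


per_point = cost_per_accuracy_point(models, BASELINE)
# Print the models from cheapest to most expensive per accuracy point.
for name, value in sorted(per_point.items(), key=lambda kv: kv[1]):
    print(f"{name}: ${value:.2f} per percentage point over {BASELINE}")
```

Rounded to cents, the three ratios match the table's cost column, which supports this reading of it.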

Additional comparative details:

- Across all 88 items, all four models answered 61 correct and 9 incorrect in common; 18 items produced mixed results.

- On those mixed items, correctness rates were: GoodNurse 50%, ChatGPT 33%, Claude 56%, Copilot 39%.

- Waveform interpretation errors (lower is better): GoodNurse 39%, ChatGPT 56%, Claude 48%, Copilot 56%.

- Matching errors: GoodNurse 20%, others 40%.

Why specialization wins: ECG success depends on structured, image-first reasoning (rate → regularity → P waves → intervals → morphology). Domain-specific tuning and nursing-aligned prompts reduce distractor drift and improve fidelity to clinical judgment steps. See foundational skills overviews in the NIH/NCBI Bookshelf.

💡 Why This Matters for Nursing Education

- Measured gains: Statistically significant lift in a high-stakes competency (ECG) that maps directly to telemetry and med-surg readiness.

- Budget-friendly: Positive cost-effectiveness at the course level; see the CDC’s program evaluation pages for plain-language ICER context.

- Policy-aligned: Easy to deploy alongside NCLEX exam restrictions and campus integrity rules.

- Scalable: Start with ECG, then expand to med-surg, pharmacology, and NGN case formats.

Popular onboarding paths (GoodNurse guides):

- How to Read NGN Case Stems

- NGN Matrix/Grid Items: Format & Examples

- NGN Prioritization, Delegation & Assignment

- ABG Interpretation: 15 Practice Cases

- Electrolyte Imbalances Made Easy

- Electrolytes Cheat Sheet

- Ultimate Electrolyte Guide

❓ Frequently Asked Questions (FAQ)

Did students who used GoodNurse really get better grades?

Yes. Mean 95.1% for users vs 88.8% for non-users in the 4-credit ECG course, p = .0048.

How did GoodNurse compare to ChatGPT and others?

GoodNurse achieved the highest overall accuracy (85.3%) on the 88-item exam and the lowest error rate on ECG waveform interpretation (39%, vs 48% to 56% for the other models).

Is GoodNurse cost-effective for schools?

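Yes. The course study calculated about $5.80 per percentage point of grade gain. In the model-comparison study, GoodNurse also had the lowest cost per percentage point of accuracy gained among the paid tools ($8.06, vs $83.33 for Copilot and $250.00 for ChatGPT).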

What is GoodNurse?

A specialized generative AI tutor designed for nursing education. It supports concept clarification, NGN-style practice, and image-guided ECG reasoning.

🎓 See the Evidence-Based Solution for Yourself

The University of Rochester findings point to a cost-effective path to improving course outcomes and ECG interpretation accuracy.

If your program is exploring AI integration, do not settle for a general-purpose tool. Request a demo of GoodNurse to see how specialization translates into measurable gains.

Resources & Further Reading

Authoritative resources (.edu/.gov/.org)

- University of Rochester School of Nursing

- Eastern Nursing Research Society (ENRS)

- NCSBN: NCLEX Policies & Exam-Day Rules • NCLEX.com

- CDC: Program Evaluation & ICER Basics

- NIH/NCBI Bookshelf: ECG & Clinical Skills